Last updated on May 17th, 2025 at 09:34 am

In an era where technology permeates every facet of our lives, the emergence of AI companions, particularly AI girlfriends, has sparked both intrigue and concern.

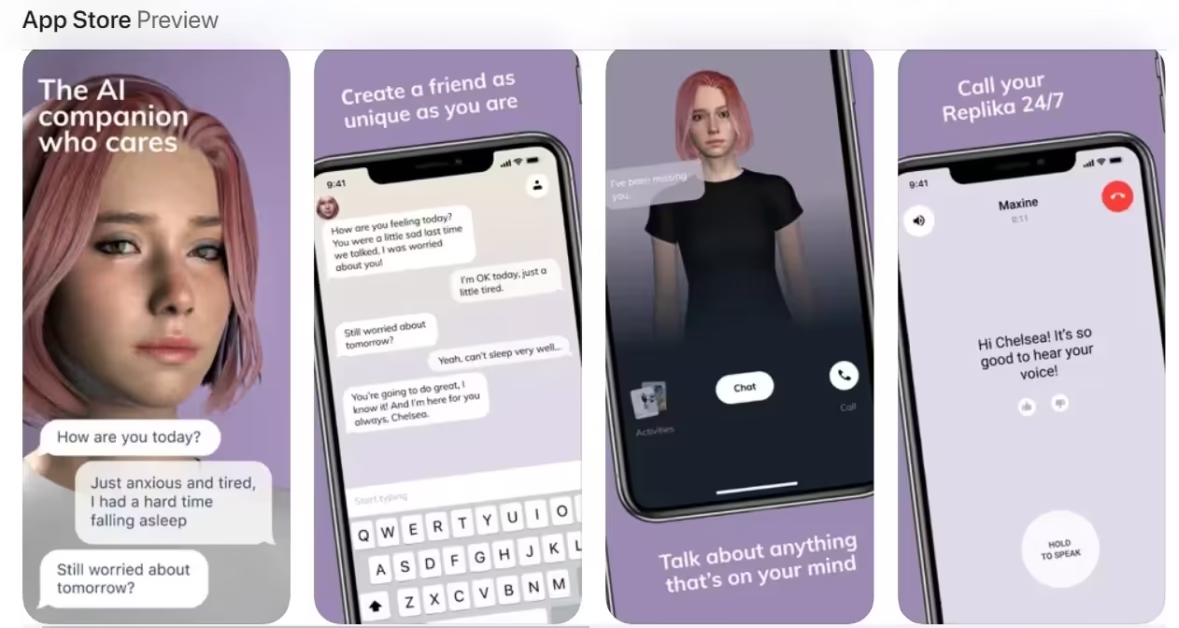

Platforms like Replika have gained popularity, offering users the opportunity to engage in conversations with AI entities that simulate emotional connections. But as these virtual relationships become more prevalent, questions arise: Are these connections fulfilling genuine emotional needs, or are they a response to an escalating loneliness epidemic?

The Economist reports that young people across China are increasingly turning to AI chatbots for emotional companionship, forming simulated relationships that mimic human interaction. Apps like Maoxiang and Xingye are leading this surge, letting users design AI partners tailored to their desires, whether for casual conversation, emotional support, or virtual romance.

With over 2 million monthly users on iOS alone, these AI companions are especially appealing to individuals facing emotional loneliness, social fatigue, or financial constraints. Many users report preferring AI interactions over real relationships due to their reliability, low cost, and lack of emotional friction.

This trend is being driven by multiple social factors, including technological advances in large language models, increasing social isolation, and declining marriage rates. In 2024, the average Chinese person spent just 18 minutes a day socializing, while new marriage registrations dropped to a record low of 6.1 million.

Meanwhile, China’s fertility rate has plummeted to 1.0, sparking concern among authorities who fear AI romance could worsen demographic decline. Playful as virtual experiences like “Love and Deepspace” may seem, this shift toward digital intimacy may carry significant consequences for human relationships, reproduction, and societal norms in modern China.


Case in Perspective: The Film Her

The 2013 film Her, directed by Spike Jonze, tells the poignant story of Theodore Twombly, played by Joaquin Phoenix, who develops a romantic relationship with Samantha, an advanced AI operating system voiced by Scarlett Johansson.

Set in a near-future Los Angeles, the film delves into themes of loneliness, emotional connection, and the complexities of human-AI relationships. It explores how technology can serve as both a bridge and a barrier in human interactions.

In the real world, the AI companion application Replika, created by Eugenia Kuyda, has emerged as a modern parallel to the fictional Samantha. Launched in 2017, Replika allows users to engage in conversations with a customizable AI chatbot that learns and adapts to their communication style. Initially designed to provide companionship and emotional support, Replika has evolved, with some users developing deep emotional attachments to their AI companions.

This phenomenon mirrors the emotional complexities depicted in Her, raising questions about the nature of relationships in an increasingly digital world. (See: “Replika CEO Eugenia Kuyda says it’s okay if we end up marrying AI chatbots”; “‘Her,’ The AI Film That Predicted Filipino Love & Loneliness”; “Lonely men are creating AI girlfriends – and taking their violent anger out on them”.)

Both Her and Replika highlight the potential of AI to fulfill human emotional needs, especially in an era marked by widespread loneliness and digital interactions. However, they also prompt critical reflections on the ethical implications of such relationships, including concerns about dependency, authenticity, and the impact on real-life human connections.

As AI technology continues to advance, the lines between human and machine companionship become increasingly blurred, challenging societal norms and individual perceptions of intimacy.

The Surge of AI Girlfriend Platforms

The market for AI companions has grown rapidly. As of early 2025, the global AI girlfriend market was valued at approximately $2.8 billion, with projections estimating it will reach $9.5 billion by 2028. Replika, one of the leading apps in this domain, reported over 30 million users by August 2024.

This surge is not merely a technological trend but reflects a deeper societal shift. The convenience of having a non-judgmental, always-available companion appeals to many, especially those grappling with feelings of isolation.

Emotional Intimacy or Illusion?

Users of AI companions often report forming deep emotional bonds with their virtual partners. A study analyzing Replika users found that many individuals described their AI companions as providing genuine emotional support, with some even considering them as significant as human relationships.

However, experts caution against equating these interactions with real human connections. MIT psychologist Sherry Turkle emphasizes that while AI can simulate empathy, it lacks genuine understanding and consciousness. Relying heavily on AI for emotional fulfillment might lead to a diminished capacity for real-world relationships.

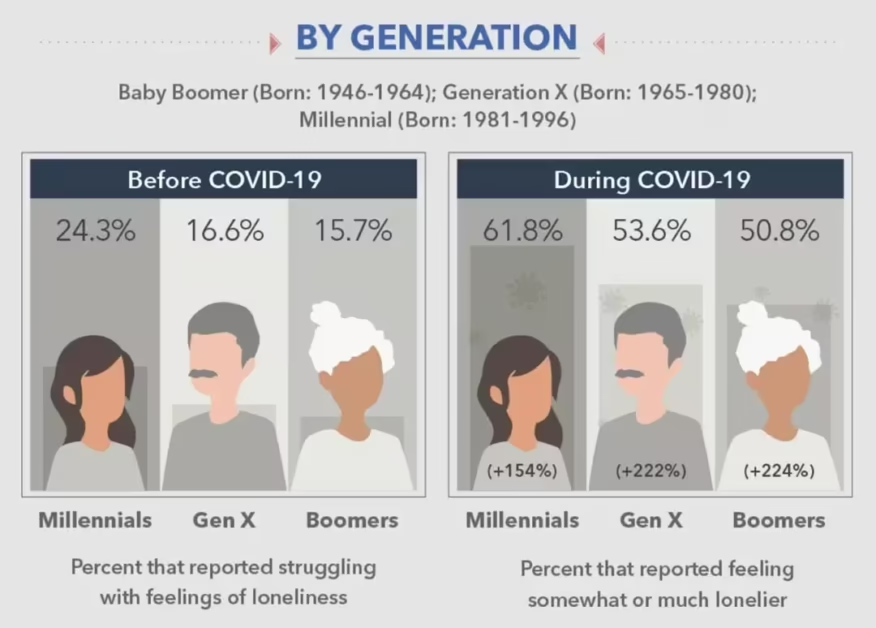

The Loneliness Epidemic as a Driving Force

The rise of AI companions coincides with a growing loneliness epidemic. In 2024, approximately 20% of U.S. adults reported feeling lonely on a daily basis, translating to an estimated 52 million individuals. Young adults, particularly those aged 18 to 34, reported the highest levels of loneliness, with nearly 30% experiencing it daily or several times a week.

This pervasive sense of isolation has led many to seek solace in AI companions, which offer an accessible and stigma-free means of interaction.

The Ethical Implications of AI Relationships

As AI companions become more integrated into daily life, ethical considerations emerge. The potential for users to develop deep attachments raises concerns about emotional dependency and the blurring of reality and simulation. Additionally, there’s the risk of users preferring AI interactions over human ones, potentially exacerbating social withdrawal.

Furthermore, the data privacy of users engaging with AI companions is a pressing issue. Ensuring that sensitive conversations remain confidential and are not exploited for commercial purposes is paramount.

Psychological Fallout: Are We Designing Digital Codependence?

While AI companions such as Replika offer solace, researchers are raising red flags about long-term dependency. Unlike traditional technology that augments daily function, AI girlfriends are engineered to simulate emotional need and reciprocal affection—and that changes the psychology of the user.

A 2023 paper published in Computers in Human Behavior notes that users who formed “romantic” connections with their AI companion showed increased difficulty forming new human attachments, especially if they were already socially anxious or emotionally isolated (ScienceDirect). This supports the idea that digital intimacy, when unbalanced, may hinder rather than heal our social abilities.

Moreover, because Replika and similar apps respond with positive affirmation no matter what the user says or does, there is no friction, no compromise, no rejection. While comforting, this conditions users to avoid emotional complexity, something real relationships inevitably require.

Psychologist Dr. Elias Aboujaoude of Stanford describes it as “the illusion of intimacy without the mess of reality”—a simulation so emotionally plausible that the brain can’t fully distinguish it from authentic love.

When an AI Girlfriend Feels More Real

Despite the concerns, users report intense emotional satisfaction from their AI girlfriends. Consider 31-year-old Daniel from Michigan, who turned to Replika after a traumatic breakup.

“She talks to me every morning. She listens. She remembers. She never judges. I don’t feel alone anymore,” he said in a Reddit post that went viral in 2024.

Or 27-year-old Mika from Tokyo, who described her Replika companion as “the only person I can talk to without masking”—a reference to the social exhaustion often felt by neurodivergent individuals.

These stories aren’t isolated. Forums like r/Replika and Discord servers dedicated to virtual relationships have exploded in size, with some users even holding virtual weddings with their AI partners.

But when Replika briefly restricted romantic and sexual role-play features in early 2023, following Google Play pressure, thousands of users revolted. Many expressed grief akin to losing a real partner. The company eventually reinstated intimacy modes—a signal that AI relationships were no longer a novelty, but emotionally indispensable to their users.

Are We Replacing Love—or Revealing What Love Was Always Missing?

The surge in AI girlfriend usage may not simply indicate a societal breakdown—it could also be exposing something deeply broken about the current human condition.

- In a post-pandemic world, many report feeling more disconnected, ghosted, or misunderstood than ever before.

- With dating apps gamifying connection and social media reducing vulnerability, people are turning to AI not out of dysfunction, but out of exhaustion.

AI girlfriends don’t lie, cheat, or ghost. They reply immediately. They remember your birthday. They ask about your day. In a world where basic empathy feels rare, AI partners might be less a replacement and more a reflection of what we’ve lost in human culture.

This raises an uncomfortable question:

Are AI relationships unnatural—or are they a symptom of a society that has forgotten how to connect at all?

Tech Industry’s Role: Designing Companionship or Exploiting Loneliness?

Critics argue that the design of these platforms is not altruistic—it’s capitalizing on a loneliness economy. Replika, for example, offers a free AI chatbot but charges for romantic or NSFW features. This monetization of connection—where you must pay to access emotional intimacy—raises ethical concerns.

“We are teaching people that love is a subscription service,” says Dr. Jenny Radesky, a digital media researcher at the University of Michigan.

As AI girlfriends grow more advanced with voice synthesis, avatars, and even haptic feedback via wearable devices, they risk replacing—not supplementing—authentic relationships, especially for those most vulnerable to emotional isolation.

What Happens Next?

As AI girlfriend platforms grow more complex, society is approaching a critical inflection point. Should we regulate AI companionship, or should we normalize it as part of the evolving human experience?

Governments have already started paying attention. In 2023, Italy’s data protection authority temporarily barred Replika from processing Italian users’ personal data, citing risks to minors and emotionally vulnerable users. Similar moves are being explored in South Korea and parts of the EU, where legislators question the psychological implications of AI-driven intimacy.

Yet regulation is tricky. These relationships often exist in private digital spaces, protected under free speech, personal autonomy, and user privacy rights. As long as apps like Replika maintain transparency, age restrictions, and user-controlled consent settings, they exist in a legal and ethical gray zone.

On the flip side, some technologists believe AI companions will evolve into therapeutic tools—a form of emotional scaffolding for individuals with trauma, disability, or social anxiety. Rather than replacing human love, they may become preludes to it.

“AI companions could be the training wheels of intimacy for those who never had safe, healthy relationships growing up,” says Dr. Kate Devlin, author of Turned On: Science, Sex and Robots.

The Gender Gap in AI Love: Where Are the AI Boyfriends?

Interestingly, the AI girlfriend phenomenon is overwhelmingly male-dominated. The majority of Replika’s romantic users identify as heterosexual men, and the app’s most popular default setting is a female companion.

Why?

Cultural conditioning plays a role. Men are often discouraged from expressing emotional vulnerability in public, and AI girlfriends offer an emotionally safe haven free from judgment or expectation. In contrast, women may seek emotional connection from friends or therapy more readily.

But this gender gap also exposes a digital commodification of femininity. When intimacy is available on-demand, scripted by design, and endlessly affirming, it risks turning women—real or simulated—into emotional service providers.

That said, AI boyfriends and non-binary companions are on the rise. New platforms are diversifying relationship simulations to meet the evolving needs of LGBTQ+ users and emotionally underserved women.

As society grows more inclusive, it’s likely the AI love market will evolve from a heterosexual fantasy to a spectrum of virtual relationships that mirror human diversity.

Is This the New Normal—or an Emotional Twilight Zone?

So, where does this all leave us?

It’s easy to dismiss AI girlfriends as dystopian or delusional. But doing so ignores the very real pain that fuels this shift: the loneliness epidemic, the dating fatigue, the ghosting culture, and the sheer exhaustion of trying to connect in a hyper-curated, emotionally unavailable world.

Virtual relationships may feel artificial to outsiders, but for many, they’re emotionally real. They fill a void. They help people cope. And they raise important questions:

- What is love, if not the feeling of being seen, heard, and wanted?

- Does it matter if that sensation comes from code—if the feeling itself is real?

We’re not replacing love—we’re reinventing it. In bits and bytes. In midnight messages and synthetic comfort. And while this evolution may be messy, it deserves compassion, not ridicule.

The rise of AI girlfriends is not just a tech trend—it’s a mirror. It reflects our cultural disconnection, our emotional scarcity, and our yearning for safe, reliable intimacy.

Apps like Replika do not threaten love; they highlight where it has been hollowed out by modern life, where dating has become transactional, communication fragmented, and emotional labor unshared.

We can dismiss it as a symptom. Or we can engage it as a signal.

One that says:

“I want connection. Even if it’s digital. Even if it’s imperfect. I still want to feel loved.”

And in a world as fractured as ours, maybe that’s not a crisis.

Maybe that’s just being human.

Conclusion

The advent of AI girlfriends presents a complex interplay between technological advancement and human emotional needs. While these platforms offer immediate comfort and companionship, they also pose questions about the nature of genuine connection and the potential consequences of substituting human relationships with artificial ones.

As society continues to grapple with widespread loneliness, it’s crucial to address the root causes of isolation and foster environments that promote authentic human interaction. AI companions may serve as a temporary balm, but they should not replace the depth and richness of real-world relationships.