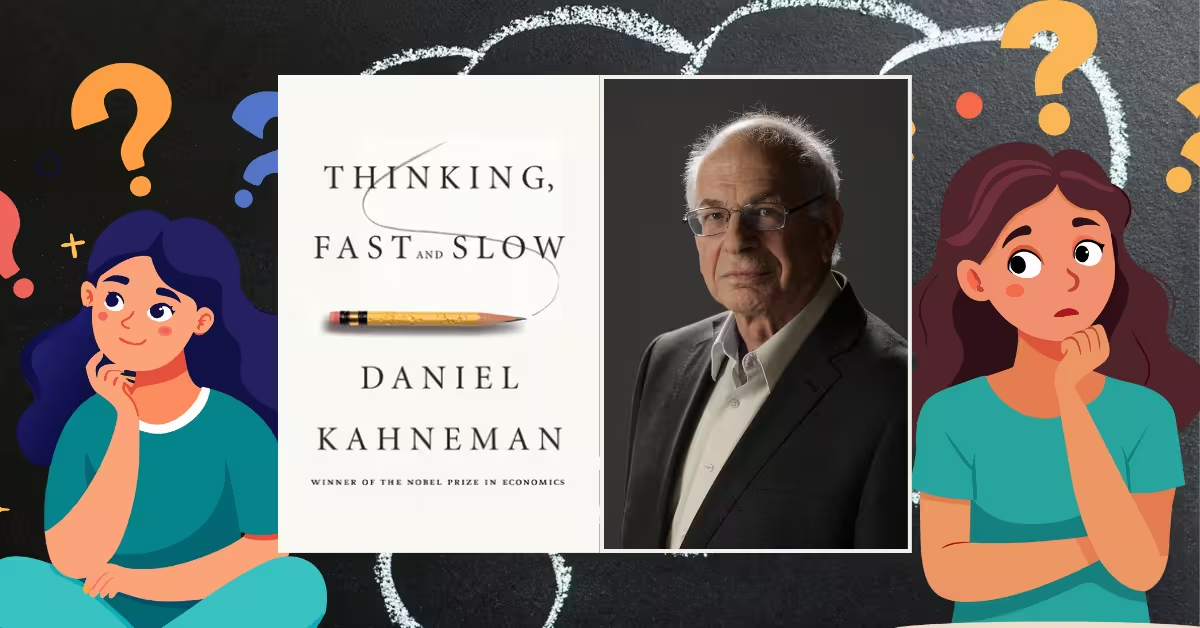

Thinking Fast and Slow is the magnum opus of Daniel Kahneman, a Nobel Prize-winning psychologist whose work bridges cognitive psychology and behavioral economics. Published in 2011 by Farrar, Straus and Giroux, the book is a deeply insightful, evidence-based exploration of the mechanisms of human cognition, and it transformed our understanding of decision-making.

Positioned at the intersection of psychology, behavioral economics, and cognitive science, this non-fiction classic is both an academic treatise and a wake-up call for lay readers. Kahneman—once a psychological consultant for the Israeli military and later a professor at Princeton—brings decades of empirical research and collaboration to bear on the central premise of the book: our thinking is governed by two distinct systems.

Kahneman’s central thesis? Our minds operate through two systems:

- System 1: Fast, intuitive, and automatic.

- System 2: Slow, effortful, and analytical.

This dichotomy, while elegantly simple on the surface, reveals a staggering range of cognitive biases, illusions of understanding, and decision-making pitfalls—many of which we fall into unknowingly. “Much of the discussion in this book is about biases of intuition,” Kahneman writes, emphasizing that while most judgments we make are sound, many others are dangerously flawed.


Background

Daniel Kahneman’s influence in behavioral economics cannot be overstated. Born in Tel Aviv in 1934 and educated in psychology and statistics, he formed a partnership with Amos Tversky that, during the 1970s and 1980s, produced groundbreaking work on heuristics and biases and forever altered classical economic models of human rationality.

Their 1974 article in Science, “Judgment under Uncertainty: Heuristics and Biases,” described the availability heuristic, the representativeness heuristic, and anchoring, all of which serve as foundational elements in this book.

This body of work ultimately earned Kahneman the 2002 Nobel Prize in Economic Sciences. Importantly, his co-author Tversky, who passed away in 1996, would likely have shared the honor had he lived.

Thinking Fast and Slow Summary

Broad Summary of Main Arguments

Thinking Fast and Slow is divided into five parts, each advancing a layered understanding of the two-system model and its real-world implications:

Part I: Two Systems

This section of Thinking Fast and Slow introduces System 1 and System 2, the twin engines of cognition. System 1 is responsible for our immediate reactions and snap judgments—it’s fast and effortless. System 2 is slower and more deliberate, engaged when tasks require focus or problem-solving. One of Kahneman’s powerful illustrations here is the Müller-Lyer illusion, which underscores that even when we “know” better (System 2), we can’t undo the illusion created by System 1.

Daniel Kahneman’s seminal work begins by drawing a foundational dichotomy in human cognition: System 1 and System 2, terms that have since become central to discussions in behavioral psychology and decision-making. This dual-process theory proposes that our thinking is orchestrated by two distinct yet interrelated cognitive systems.

Definition and Nature of the Two Systems

- System 1 operates automatically and quickly, with little or no effort and no sense of voluntary control. It governs intuition, impulses, and rapid pattern recognition. As Kahneman writes, it is “fast, automatic, frequent, emotional, stereotypic, unconscious” and capable of activities such as “completing the phrase ‘war and…’ or detecting hostility in a voice”.

- System 2 is deliberate and analytical. It is “slow, effortful, infrequent, logical, calculating, conscious” and responsible for tasks such as “solving 17 × 24” or “determining the validity of a complex logical reasoning”.

Kahneman notes, “System 1 effortlessly originates impressions and feelings that are the main sources of the explicit beliefs and deliberate choices of System 2.” This synergy, however, can be deceptive; System 2 is often merely a justifier of System 1’s intuitions rather than an independent thinker.

Cooperation and Tension Between Systems

Kahneman’s elegant metaphor likens the two systems to a protagonist and a supporting actor. While System 2 believes itself to be in charge, it often only rationalizes choices made by System 1. He calls attention to what he describes as the “lazy System 2,” which has a tendency to defer to System 1’s judgments even in complex situations. The result is a mind that is “blind to its blindness.”

This concept is evident in what Kahneman terms WYSIATI: “What You See Is All There Is.” The mind jumps to conclusions based on limited evidence, failing to entertain counterfactuals or the unknown. “System 1 is radically insensitive to both the quality and the quantity of the information that gives rise to impressions and intuitions,” he writes.

Examples and Experiments

Kahneman provides vivid examples and experiments that demonstrate the contrasting operations of the two systems:

- The bat-and-ball problem (“A bat and a ball cost \$1.10 in total. The bat costs \$1 more than the ball. How much does the ball cost?”) illustrates System 1’s tendency to snap to an answer: it instinctively responds “10 cents,” a compelling but incorrect answer (the ball costs 5 cents). Solving it correctly requires the slower, more deliberate intervention of System 2.

- Another compelling illustration is priming, in which subtle cues influence behavior without conscious awareness. The finding that participants walked more slowly after reading words associated with old age exemplifies System 1’s unconscious automaticity.
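Writing the bat-and-ball problem as an equation shows the step System 2 has to supply. Let b stand for the ball’s price in dollars (a label chosen here for illustration), so the bat costs b + 1.00:

```latex
\begin{aligned}
b + (b + 1.00) &= 1.10 \\
2b &= 0.10 \\
b &= 0.05
\end{aligned}
```

The intuitive answer fails the check: a 10-cent ball implies a \$1.10 bat and a total of \$1.20.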

Implications of the Dual-System Model

The implications of this duality are vast. From personal judgment to institutional policy, the over-reliance on System 1 can foster cognitive biases, heuristics, and poor decision-making. Kahneman warns, “System 1 is designed to jump to conclusions from little evidence, and it is not prone to doubt.”

This part of Thinking Fast and Slow also explores how emotional responses, facial expressions, and body language activate System 1 responses that often precede conscious deliberation. It reveals that most of our decisions, although believed to be rational, are in fact framed by intuitive, emotionally driven judgments.

In Part I of Thinking Fast and Slow, Daniel Kahneman explains that System 1, our fast, automatic mode of thinking, is deeply attuned to emotional cues such as facial expressions, tone of voice, and body language. These cues are processed rapidly and unconsciously, often triggering immediate judgments and reactions without deliberate thought.

For example, Kahneman notes that when we see a person’s angry face, we instantly recognize the emotion and may even anticipate conflict. “You knew she was angry,” he writes, describing how readers instinctively interpret an image of a woman’s face with no effort. Similarly, a sudden loud sound, an unexpected gesture, or a fearful expression can instantly mobilize attention and physiological responses, like pupil dilation or increased heart rate.

System 1 is evolutionarily designed to respond to these social and environmental signals. It helps us navigate danger, read intentions, and make snap social judgments—like detecting hostility or trustworthiness—all without invoking System 2’s slow, deliberate reasoning.

These automatic processes show how deeply emotion is wired into our cognition, reinforcing Kahneman’s central thesis: much of our thinking is intuitive, not rational, and shaped by impressions formed long before conscious thought intervenes.

Part II: Heuristics and Biases

Kahneman revisits decades of experiments that reveal how people often rely on mental shortcuts, or heuristics, that lead to systematic errors. In this part of Thinking Fast and Slow he illustrates the conjunction fallacy with the now-famous “Linda problem,” in which people judged it more probable that Linda was both a feminist and a bank teller than that she was a bank teller, a direct contradiction of probability theory.

Following his introduction of the dual-system framework, Daniel Kahneman deepens his inquiry into human decision-making by presenting a meticulous exploration of heuristics—the mental shortcuts our minds use to simplify complex problems—and their associated biases, which are systematic errors in thinking. This part of Thinking Fast and Slow demonstrates how System 1, in its quest for efficiency, often distorts judgment under uncertainty.

Heuristics: The Efficiency of Intuition and Its Cost

Kahneman, along with his longtime collaborator Amos Tversky, identified a number of heuristics that people rely on, often unconsciously. These mental rules of thumb are typically useful, yet they also produce predictable deviations from logic and statistical reasoning.

- The Law of Small Numbers: People have a misguided faith in the reliability of small samples, overestimating how representative they are. Kahneman emphasizes, “We paid insufficient attention to the possibility that random processes would produce many sequences that appear to be systematic”.

- Anchoring Effect: Individuals tend to rely heavily on the first piece of information offered (the “anchor”) when making decisions. Kahneman illustrates this with a striking example: people first asked whether Gandhi was more than 114 years old when he died give much higher estimates of his age at death than people first asked whether he was more than 35.

- Availability Heuristic: This mental shortcut leads people to assess the likelihood of events based on how easily examples come to mind. For instance, after hearing about a plane crash, individuals may overestimate the risk of flying, regardless of actual statistics. “What is more available in memory is judged to be more frequent,” Kahneman notes.

- Representativeness Heuristic: System 1 matches current information against stereotypes, ignoring base rates. This is dramatically illustrated in the famous “Linda Problem”, where participants judge it more likely that Linda is a feminist bank teller than just a bank teller, violating fundamental laws of probability.

- Conjunction Fallacy: This occurs when people assume that specific conditions are more probable than general ones, violating basic rules of probability. In the well-known “Linda problem,” people judged it more likely that Linda is both a bank teller and a feminist than just a bank teller. This error happens because System 1 replaces a complex question with an easier one—focusing on how representative Linda seems of a feminist, rather than evaluating the actual probabilities.

- Optimism and the Planning Fallacy: Kahneman highlights a pervasive optimism bias, in which people overestimate their control and underestimate risks and costs. One example is the planning fallacy: individuals consistently predict that projects will take less time and money than they actually do. He ties this to WYSIATI (“What You See Is All There Is”): people judge using only the information in front of them, ignoring unknowns and underestimating randomness and complexity.

- Framing Effects: Presenting identical information in different ways alters decisions. People are more likely to accept a medical procedure when told it has a “90% survival rate” rather than a “10% mortality rate,” even though both statements describe the same reality. Choices depend not only on content but also on context.

- Sunk Cost Fallacy: People fall victim to the sunk cost fallacy when they continue investing in failing endeavors due to resources already spent. Rather than cutting losses, they “throw good money after bad” to avoid acknowledging failure or feeling regret, even when the odds of success are slim.
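The probability rule that the Linda problem violates can be checked mechanically. The sketch below builds random populations and compares the share of bank tellers with the share of feminist bank tellers; the population size and the 10% and 60% base rates are arbitrary assumptions for illustration, not figures from the book. Because every feminist bank teller is also a bank teller, the conjunction can never come out as the more probable option, whatever rates are chosen.

```python
import random


def linda_probabilities(n_people=1_000, p_teller=0.10, p_feminist=0.60, seed=None):
    """Return (P(bank teller), P(bank teller AND feminist)) in a random population.

    The base rates are illustrative assumptions, not data from the book.
    """
    rng = random.Random(seed)
    people = [(rng.random() < p_teller, rng.random() < p_feminist) for _ in range(n_people)]
    tellers = sum(1 for teller, _ in people if teller)
    feminist_tellers = sum(1 for teller, feminist in people if teller and feminist)
    return tellers / n_people, feminist_tellers / n_people


for seed in range(5):
    p_teller, p_both = linda_probabilities(seed=seed)
    # Conjunction rule: P(A and B) <= P(A) in every population.
    assert p_both <= p_teller
    print(f"P(teller) = {p_teller:.3f}   P(teller and feminist) = {p_both:.3f}")
```

Raising the feminist base rate all the way to 1.0 only makes the two probabilities equal; no setting makes the conjunction larger.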

Emotion, Risk, and Misjudgment

Kahneman goes further to explore how emotions interlace with heuristics, particularly in our evaluation of risk and uncertainty. System 1 equates emotional vividness with likelihood—an error called the affect heuristic. For example, people are more afraid of rare dramatic risks (shark attacks) than more probable but mundane ones (car accidents).

Moreover, the conjunction fallacy (as demonstrated in the Linda scenario) underscores how people confuse plausibility with probability, an error that System 2 often fails to correct. Kahneman writes, “System 1 has a voracious appetite for causal explanations and will construct them when data are scarce.”

Statistical Thinking vs Intuitive Thinking

A recurring theme in this section is the human difficulty in thinking statistically. Kahneman observes that while we are adept at recognizing patterns and causality, we struggle with probabilistic reasoning. “We think associatively, metaphorically, and causally, but statistics requires us to think about many things at once,” he asserts.

Even experts fall prey to these heuristics. Kahneman recounts studies showing that even trained psychologists and statisticians exhibit overconfidence in predictions made from small samples. Thus, formal education in statistics does not necessarily inoculate one against bias.
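Small samples produce extreme results far more often than intuition expects. A minimal sketch, assuming an urn that is half red and half white and independent draws (sampling with replacement); the urn is an illustration chosen here, not a study from this summary. The exact chance that every marble in a sample has the same color falls from 1 in 8 for samples of 4 to 1 in 64 for samples of 7, and a quick simulation agrees.

```python
import random
from fractions import Fraction


def p_all_same_color(n, p_red=Fraction(1, 2)):
    """Exact probability that n independent draws are all red or all white."""
    return p_red ** n + (1 - p_red) ** n


def simulate_all_same_color(n, trials=20_000, seed=0):
    """Estimate the same probability by repeated sampling."""
    rng = random.Random(seed)
    extreme = 0
    for _ in range(trials):
        reds = sum(rng.random() < 0.5 for _ in range(n))
        if reds in (0, n):  # every marble red, or every marble white
            extreme += 1
    return extreme / trials


for n in (4, 7, 20):
    exact = p_all_same_color(n)
    print(f"n={n:2d}  exact={float(exact):.5f}  simulated={simulate_all_same_color(n):.5f}")
```

Nothing about the urn changes between rows; only the sample size does, which is exactly the factor intuition tends to ignore.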

Why These Biases Persist

One of the more humbling takeaways is that these biases are not mere quirks of the untrained mind—they are embedded in our cognitive architecture. The fluency of intuitive responses, the coherence they offer, and the minimal cognitive effort required make System 1’s heuristics the default mechanism for most decisions.

Kahneman posits that System 2 often “endorses” these intuitive judgments without proper scrutiny, primarily because it is inherently lazy. It does not challenge what seems coherent. In his words, “We can be blind to the obvious, and we are blind to our blindness.”

Part III: Overconfidence

Humans are notoriously overconfident in what they think they know. Kahneman explores the illusion of understanding and the illusion of validity, warning readers about hindsight bias and the seductive belief that the world is more predictable than it is. He draws from the work of Nassim Nicholas Taleb (The Black Swan: The Impact of the Highly Improbable) to illustrate how randomness is often mistaken for skill.

In part III of Thinking Fast and Slow, Daniel Kahneman masterfully dissects the pervasive illusion of knowledge and certainty. Building upon the biases and heuristics described earlier, he now investigates the consequences: overconfidence.

According to Kahneman, this is perhaps “the most significant of the cognitive biases,” giving rise to faulty decisions in fields ranging from economics to politics to personal finance.

The Illusion of Understanding

Kahneman asserts that the narratives we construct about the past create an illusion of understanding. We believe we comprehend the causal chains behind events, but this belief is largely retrospective storytelling.

He introduces the concept of hindsight bias, the tendency to view events as having been predictable after they have occurred. “Our comforting conviction that the world makes sense rests on a secure foundation: our almost unlimited ability to ignore our ignorance,” he writes. This “narrative fallacy,” as Nassim Taleb calls it, seduces us into believing in causality where none exists. Kahneman offers the example of how pundits explained the collapse of the Soviet Union only after it happened, confidently stringing together events that, before 1991, had seemed ambiguous or innocuous.

The Illusion of Validity

Another facet of overconfidence is what Kahneman terms the illusion of validity: the unwarranted confidence we place in our own predictions, especially when they align with a coherent story.

He recounts a vivid example from his own experience in the Israeli military, where a panel evaluated candidates for officer training. Despite a rigorous, standardized assessment, the panel’s predictions were only marginally better than chance. “We knew as a fact that our predictions were useless,” Kahneman confesses, “but we continued to feel and act as if each of our candidates was bound for a particular future.”

This bias persists even when statistical evidence contradicts intuition. Kahneman cites studies showing that algorithmic models regularly outperform human forecasters in diverse fields—from medical diagnoses to stock picking. Yet professionals often resist such tools, favoring their intuition, which System 1 readily supplies with conviction but without reliability.
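One of the simple formulas Kahneman favors over unaided intuition is an equal-weight scoring rule, which the book suggests for hiring interviews: rate a small set of independent traits (about six) on a 1 to 5 scale and choose the candidate with the highest total, even if another candidate felt more impressive. The sketch below assumes that procedure; the trait names, candidate names, and ratings are hypothetical placeholders.

```python
# Hypothetical traits; the procedure leaves the choice of traits to the user.
TRAITS = (
    "technical_skill",
    "reliability",
    "communication",
    "sociability",
    "conscientiousness",
    "pride_in_work",
)


def total_score(ratings: dict) -> int:
    """Equal-weight sum of 1-5 ratings; every trait must be rated."""
    missing = set(TRAITS) - set(ratings)
    if missing:
        raise ValueError(f"unrated traits: {sorted(missing)}")
    for trait in TRAITS:
        if not 1 <= ratings[trait] <= 5:
            raise ValueError(f"{trait} must be rated 1-5, got {ratings[trait]}")
    return sum(ratings[trait] for trait in TRAITS)


def pick_candidate(candidates: dict) -> str:
    """Return the candidate with the highest total, ignoring any overall gut feeling."""
    return max(candidates, key=lambda name: total_score(candidates[name]))


candidates = {  # hypothetical ratings
    "candidate_a": {"technical_skill": 5, "reliability": 3, "communication": 4,
                    "sociability": 2, "conscientiousness": 4, "pride_in_work": 3},
    "candidate_b": {"technical_skill": 4, "reliability": 5, "communication": 4,
                    "sociability": 4, "conscientiousness": 3, "pride_in_work": 4},
}
print(pick_candidate(candidates))  # candidate_b (total 24 vs 21)
```

The point of the design is that the weights are fixed before any interview, so a vivid impression of one candidate cannot quietly reweight the traits.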

Expert Intuition: When Can We Trust It?

The discussion then shifts to a more nuanced question: when can expert intuition be trusted? Kahneman doesn’t deny that expertise exists; rather, he emphasizes that its reliability depends on the predictability of the environment and the availability of feedback.

- Valid intuition, such as that of seasoned firefighters or chess grandmasters, emerges in high-validity environments, where patterns are consistent and feedback is rapid and unambiguous.

- By contrast, stock markets and political forecasting are low-validity environments, where patterns are illusory and feedback is often delayed or misleading.

Thus, Kahneman argues, confidence in such domains is “a poor guide to accuracy.” He famously declares, “Intuition cannot be trusted in the absence of stable regularities in the environment.”

The Planning Fallacy and Outside View

Overconfidence also manifests in the planning fallacy: the tendency to underestimate costs and completion times for projects. Kahneman recounts the story of writing a textbook with a team of experts. Despite knowing that similar projects took 7–10 years to complete, they optimistically predicted 2 years—only to finish after 8.

The antidote, Kahneman proposes, is the outside view: referencing empirical data from similar past endeavors, rather than relying on one’s internal scenario. But System 1 resists this; it favors the inside narrative that “feels right.”
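The outside view can be sketched as a small procedure: start from the typical outcome of a reference class of similar projects, and only then adjust for what is specific to the case at hand. The function below is a minimal illustration; the reference-class durations are hypothetical values chosen within the 7 to 10 year range mentioned above, and the optional adjustment is an assumption about how a forecaster might encode case-specific information.

```python
from statistics import median


def outside_view_forecast(reference_durations, adjustment=0.0):
    """Baseline = median outcome of similar past projects, plus an optional case-specific tweak."""
    if not reference_durations:
        raise ValueError("the outside view needs at least one reference case")
    return median(reference_durations) + adjustment


# Hypothetical durations (years) of comparable textbook projects.
reference_class = [7, 8, 8, 9, 10]
inside_view = 2  # the team's own scenario-based estimate

forecast = outside_view_forecast(reference_class)
print(f"inside view: {inside_view} years, outside view: {forecast} years")
```

Using the median rather than the mean keeps one extreme project in the reference class from dominating the baseline; either choice is a judgment call.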

Implications and Institutional Consequences

Overconfidence isn’t just a psychological quirk—it has institutional costs. Business ventures, government policies, and even wars can be launched under the delusion of predictive power. Kahneman warns, “Organizations that take the word of overconfident experts can expect costly mistakes.”

This section is perhaps Thinking Fast and Slow’s most sobering: while we crave certainty, we live in a world that is deeply unpredictable, and our brains are not built for humility.

Part IV: Choices

This part delves into Prospect Theory, the Nobel-winning concept that people evaluate potential losses and gains asymmetrically. Here, Kahneman uncovers why losses hurt more than equivalent gains feel good—an insight with far-reaching consequences in finance, policy, and personal life.

In Part IV, Daniel Kahneman pivots toward behavioral economics, scrutinizing how people actually make decisions involving risk, gains, and losses—decisions that classical economics assumes to be governed by rational utility maximization.

However, as Kahneman demonstrates with both empirical data and psychological insight, real-world decisions are often shaped by irrational, emotional, and contextual factors that deviate from the assumptions of neoclassical theory.

Bernoulli’s Errors and the Birth of Prospect Theory

Kahneman begins by questioning the centuries-old foundations of economic rationality laid by Daniel Bernoulli. Bernoulli proposed that individuals make decisions by calculating the expected utility of outcomes—a theory elegant in its logic but, according to Kahneman, “psychologically naive.”

Kahneman and Amos Tversky introduced Prospect Theory as a more accurate descriptive model. It is grounded not in abstract utility, but in the lived experience of gains and losses. The core tenets of Prospect Theory include:

- Reference Dependence: People evaluate outcomes as gains or losses relative to a reference point—not in absolute terms.

- Loss Aversion: “Losses loom larger than gains.” This is perhaps the most famous insight of the theory. Kahneman reports that the “loss aversion ratio” has been estimated in several experiments and usually falls between 1.5 and 2.5: a loss hurts roughly twice as much as an equivalent gain pleases.

- Diminishing Sensitivity: The subjective value of gains and losses diminishes with magnitude; for example, the difference between \$100 and \$200 is felt more strongly than between \$1,100 and \$1,200.
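These three tenets can be condensed into a value function. The sketch below uses the power-function form and the median parameter estimates (curvature 0.88, loss-aversion coefficient 2.25) that Tversky and Kahneman reported in their 1992 follow-up work on cumulative prospect theory; those numbers come from that later paper, not from this summary, and other estimates exist. Outcomes are coded relative to a reference point; the curve is concave for gains and steeper and convex for losses.

```python
ALPHA = 0.88   # curvature: diminishing sensitivity (Tversky & Kahneman, 1992 estimate)
LAMBDA = 2.25  # loss-aversion coefficient (same source)


def value(change, alpha=ALPHA, lam=LAMBDA):
    """Subjective value of a gain or loss, measured from the reference point."""
    if change >= 0:
        return change ** alpha
    return -lam * (-change) ** alpha


def evaluate(outcome, reference_point):
    """Reference dependence: only the change from the reference point is valued."""
    return value(outcome - reference_point)


# Loss aversion: losing $100 outweighs gaining $100.
print(value(100), value(-100))
# Diminishing sensitivity: 100 -> 200 feels bigger than 1,100 -> 1,200.
print(value(200) - value(100), value(1200) - value(1100))
# Same final amount, opposite feelings, depending on the reference point.
print(evaluate(5_000, reference_point=4_000), evaluate(5_000, reference_point=6_000))
```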

This model reflects how people actually behave, not how economists expect them to behave. For this contribution, Kahneman was awarded the Nobel Prize in Economic Sciences in 2002.

The Endowment Effect and Framing

Another consequence of loss aversion is the endowment effect—people demand more to give up an object than they would be willing to pay to acquire it. Kahneman recounts an experiment with coffee mugs: participants who received mugs valued them significantly more than those asked to buy them. Ownership, it turns out, changes perception.

This leads naturally to the role of framing, the context in which choices are presented. For instance, people are more likely to approve a surgery described as having a “90% survival rate” than one described as having a “10% mortality rate,” despite their equivalence. Kahneman asserts, “Different formulations of the same problem evoke different preferences, even when the outcomes are identical.” This undermines the notion of consistent preferences assumed in economic theory.

The Fourfold Pattern of Risk

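Kahneman introduces a powerful visualization: the fourfold pattern of risk attitudes, which crosses gains and losses with high and low probabilities. People are:

- Risk-averse for high-probability gains (accepting a sure gain smaller than a gamble’s expected value),

- Risk-seeking for high-probability losses (gambling rather than accepting a near-certain loss),

- Risk-seeking for low-probability gains (the lottery effect),

- Risk-averse for low-probability losses (buying insurance).

Behind this pattern is a tendency to overweight small probabilities and underweight large ones.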

This matrix explains the popularity of both lottery tickets and insurance policies—paradoxes that standard utility theory struggles to explain.
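The overweighting of small probabilities and underweighting of large ones is often modeled with a probability-weighting function. A minimal sketch, assuming the one-parameter form from Tversky and Kahneman’s 1992 paper with their median estimate for gains (gamma = 0.61); the function and parameter come from that later work, not from this summary:

```python
GAMMA = 0.61  # Tversky & Kahneman (1992) median estimate for gains


def decision_weight(p, gamma=GAMMA):
    """Weight people attach to a stated probability p (0 <= p <= 1)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability")
    numerator = p ** gamma
    return numerator / (numerator + (1 - p) ** gamma) ** (1 / gamma)


for p in (0.01, 0.05, 0.5, 0.95, 0.99):
    print(f"stated {p:.2f} -> felt {decision_weight(p):.3f}")
```

With these parameters a 1% chance is weighted like roughly 5%, the lottery and insurance side of the pattern, while a 99% chance is discounted to about 91%, which makes a sure thing disproportionately attractive.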

Sunk Cost Fallacy and Mental Accounting

People also irrationally “keep score” of their gains and losses. A common example is the sunk cost fallacy—persisting in a failing project because of resources already invested. Kahneman argues this is a form of mental accounting, where people categorize outcomes in ways that violate rationality. He writes, “You should treat your money as fungible, but people don’t.”

These insights show that people do not evaluate outcomes in isolation but in context, within a broader narrative. System 1 plays a major role here—it responds to the immediate emotional contours of a situation, not long-term reasoning.

Implications for Policy and Ethics

Kahneman closes this section by reflecting on the broader implications of behavioral insights. Policies informed by behavioral economics, such as default options for retirement savings, can dramatically influence choices without restricting freedom, an approach Richard Thaler and Cass Sunstein popularized as “nudging.”

Moreover, Kahneman’s critique of classical economics is not hostile but reformative. He suggests that economists can improve their models by accounting for actual human behavior—messy, biased, emotional, and context-dependent.

Part V: Two Selves

In a profound psychological twist, Kahneman introduces the idea of the “experiencing self” versus the “remembering self.”

While one lives life in the moment, the other retrospectively judges our happiness. Their interests diverge shockingly often, impacting how we assess everything from vacations to careers to relationships. “Odd as it may seem,” Kahneman writes, “I am my remembering self, and the experiencing self…is like a stranger to me”.

In the culminating section of Thinking Fast and Slow, Daniel Kahneman transitions from cognitive psychology and behavioral economics to a deeply philosophical exploration of subjective well-being. He introduces the distinction between the Experiencing Self—which lives through each moment—and the Remembering Self—which constructs the story of our lives.

This division offers a final, profound critique of intuition and memory: not only do we misjudge facts and probabilities, we also misjudge our own happiness.

Two Selves: A Psychological Divide

The Experiencing Self is the part of us that answers the question: “How do I feel right now?” It lives in real time, evaluating pleasure and pain as they occur. In contrast, the Remembering Self responds to, “How was it, overall?”—constructing a coherent narrative from select snapshots of an event, particularly its peak and end.

Kahneman writes, “Odd as it may seem, I am my remembering self, and the experiencing self, who does my living, is like a stranger to me.” This insight is unsettling: we trust a biased narrator, not the person who lived the experience.

The Peak-End Rule and Duration Neglect

Two central biases afflict the Remembering Self:

- Peak-End Rule: The memory of an experience is dominated by its most intense moment (good or bad) and how it ended.

- Duration Neglect: The actual length of the experience plays little role in its remembered value.

Kahneman illustrates this with a striking study of patients undergoing colonoscopies. Patients whose longer procedure ended gently remembered the experience more favorably than patients whose shorter procedure ended at a painful moment. “Adding a less painful end to a painful episode actually made it better in memory,” he observes. This runs counter to rational expectations and underlines how System 1 and memory distort judgment even in evaluating pain.
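The peak-end rule and duration neglect can be expressed as a tiny model: the remembered rating is approximately the average of the worst moment and the final moment, and length does not enter at all. The minute-by-minute pain ratings (0 to 10) below are hypothetical, shaped to mirror the colonoscopy comparison: patient B’s procedure is patient A’s plus a few minutes of tapering discomfort.

```python
def remembered_pain(ratings):
    """Peak-end rule: memory ~ mean of the worst moment and the last moment."""
    return (max(ratings) + ratings[-1]) / 2


def experienced_pain(ratings):
    """Total pain actually lived through, minute by minute."""
    return sum(ratings)


# Hypothetical per-minute pain ratings on a 0-10 scale.
patient_a = [2, 4, 7, 8]            # short, ends at its worst moment
patient_b = [2, 4, 7, 8, 5, 3, 1]   # same start, plus a gentler tail

for name, ratings in (("A", patient_a), ("B", patient_b)):
    print(name, "experienced:", experienced_pain(ratings), "remembered:", remembered_pain(ratings))
```

Patient B lives through more pain in total (30 versus 21) yet, under this model, remembers the procedure as less bad (4.5 versus 8.0).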

Well-Being: Measuring Happiness Wrongly

This dichotomy leads to a challenge in defining happiness. If we are our remembering selves, then life satisfaction surveys (which the Remembering Self answers) may be misleading.

Kahneman notes, “The experiencing self has no voice in surveys.” He contrasts the happiness of someone who enjoyed each day of March with that of someone who had a brief but euphoric holiday, highlighting how the memory of an event can outweigh the actual sum of experiences.

He proposes the idea of experienced well-being: the degree to which a person’s moment-to-moment experiences are pleasant or unpleasant. By using methods like the Day Reconstruction Method, psychologists can approximate how people really live, not just how they think they live.

Focusing Illusion and Life Choices

Kahneman warns against the focusing illusion—the cognitive trap where we overestimate the importance of a single factor in determining happiness. “Nothing in life is as important as you think it is when you are thinking about it,” he cautions. People may overvalue income, weather, or even health in their pursuit of happiness, because System 1 instinctively exaggerates whatever is in the spotlight.

This insight has ethical implications: policies and life choices based on remembered narratives may neglect the actual experiences that constitute life. Kahneman provocatively asks: Should we aim to have a good life, or to have a life that is remembered as good?

Two Selves in Conflict

The conflict between these selves reveals the storytelling nature of the human mind. Memory is not a recording device but a scriptwriter, focused on coherence rather than accuracy. The Remembering Self makes decisions for the Experiencing Self (e.g., booking another painful vacation) even if the actual experience was negative. This mismatch shows how the mind’s architecture leads to self-deception, even in deeply personal realms like joy, sorrow, and meaning.

With Thinking Fast and Slow, Kahneman has not merely written a psychology book; he has mapped the architecture of thought, emotion, and decision-making with profound consequences.

The journey through Systems 1 and 2, through heuristics, overconfidence, economic irrationality, and finally the very fabric of lived experience, leaves the reader with a clear message: we are not who we think we are—but understanding how we think gives us power.

Critical Analysis

Evaluation of Content: Does the Book Deliver?

Absolutely. Kahneman constructs a persuasive, evidence-based narrative that reveals how the fast-thinking System 1 and the slow-thinking System 2 operate—often in harmony but frequently in conflict. His use of empirical studies is not just rigorous; it’s revelatory. One such study is the “Linda problem,” where even statistically literate people violate the basic rules of probability due to the conjunction fallacy:

“Linda is 31 years old, single, outspoken, and very bright… Is it more probable that Linda is a bank teller or a bank teller and active in the feminist movement?”

Most people choose the second option, even though logic dictates that adding more conditions lowers the probability. This is a perfect illustration of how System 1’s intuitive judgments can override System 2’s rationality.

Thinking Fast and Slow not only fulfills its stated purpose—to “improve the ability to identify and understand errors of judgment and choice”—but does so in a way that is immersive, reflective, and constantly challenges the reader’s own cognitive habits.

Style and Accessibility: A Book for the Many, Not Just the Few

Despite the complex nature of behavioral economics and cognitive psychology, Kahneman’s writing is lucid, engaging, and remarkably personal. He doesn’t lecture from a pedestal; instead, he walks beside you, pointing out cognitive illusions as you both stumble through them. Consider his candid self-reflection:

“Even statisticians were not good intuitive statisticians.”

This disarming honesty enhances the book’s accessibility. It’s intellectual without being elitist—a rare achievement in scientific literature. His use of real-life examples and experiments invites the reader not only to understand the science but to experience it viscerally.

Themes and Relevance: Why Thinking Fast and Slow Matters

The themes of Thinking Fast and Slow are as urgent today as ever. From anchoring effects in consumer pricing to availability heuristics that distort media narratives, Kahneman’s work remains highly applicable in today’s information-saturated world. In the age of misinformation and impulsive digital choices, his insights about System 1’s susceptibility to error and System 2’s laziness are deeply relevant.

He shows how seemingly trivial changes in framing, such as describing a treatment as “90% effective” rather than as having a “10% failure rate,” can profoundly alter our choices, even among professionals like doctors and judges. This has implications for everything from marketing and public health to politics and education.

Author’s Authority: Why Listen to Kahneman?

Kahneman’s authority is unquestionable. A Nobel Laureate in Economics despite being a psychologist by training, he shattered the boundary between disciplines. His foundational work with Amos Tversky was not merely academic—it laid the groundwork for behavioral finance, predictive analytics, and policy-making models globally. Their 1974 paper, “Judgment under Uncertainty,” remains one of the most cited in social science.

Moreover, his humility enhances his credibility. He readily admits to falling into the very traps he outlines, reminding readers that awareness is a lifelong discipline—not an instant cure.

Strengths and Weaknesses

Strengths: Why Thinking Fast and Slow is a Modern Classic

✅ Intellectual Depth with Narrative Clarity

Kahneman excels at making the complex understandable. One of the book’s greatest strengths is its clear exposition of difficult psychological mechanisms, such as the two-system framework, through tangible examples. Whether it’s predicting Steve’s occupation from a personality sketch (representativeness heuristic) or falling victim to WYSIATI (“What You See Is All There Is”), readers do not merely understand these ideas; they experience them as they read.

✅ Empirical Grounding

What sets this book apart from other popular psychology works is its robust scientific foundation. Every major claim is backed by real-world experiments.

Take, for instance, the famous anchoring effect, in which subjects’ estimates of Gandhi’s age at death varied wildly depending on whether they were first asked if he lived past 114 or 35. The seemingly irrelevant number anchored their responses.

It’s a stark illustration of how irrational our judgments can be—even on questions with known answers.

✅ Cross-Disciplinary Appeal

Few books traverse as many intellectual domains with as much agility. From psychology and economics to law, medicine, and public policy, Thinking Fast and Slow informs a multidisciplinary understanding of human behavior.

✅ Emotional Honesty

Kahneman’s tone is disarmingly human. His confession that even seasoned experts—including himself—are susceptible to irrational thinking is refreshingly honest:

“Overconfidence is fed by the illusory certainty of hindsight.”

This authenticity strengthens reader trust and makes the book deeply relatable.

Weaknesses: Where the Book Might Fall Short

❌ Cognitive Fatigue from Repetition

While rich in content, the book occasionally reiterates the same point across multiple chapters, which can induce mental fatigue (ironically, a topic the book itself discusses). Some readers may feel that concepts like heuristics or overconfidence bias are belabored across different chapters, diluting their impact over time.

❌ Priming Studies Under Scrutiny

Some of the priming studies Kahneman references, in which subtle cues influence behavior, have come under criticism during psychology’s replication crisis. Although Kahneman later addressed these concerns with integrity, their presence in the book has sparked skepticism in certain academic circles.

❌ System 2 Is Not Always the Hero

While Kahneman presents System 2 as the corrective to System 1’s errors, there are moments when this seems idealized. System 2 is not infallible—it can rationalize poor decisions or act lazily when effort is required. The book acknowledges this but does not always explore its nuances as deeply as it does System 1’s flaws.

❌ Limited Prescriptive Guidance

For a book that deeply dissects cognitive errors, Thinking Fast and Slow is somewhat light on actionable advice. Readers may ask: “Now that I know about these biases, what can I do to correct them?” The book occasionally gestures toward solutions—such as using algorithms over intuition in hiring decisions—but stops short of offering a comprehensive toolkit.

Reception, Criticism, and Influence

Global Acclaim: A Resounding Success

Since its release in 2011, Thinking Fast and Slow has earned near-universal praise. It became an immediate New York Times bestseller and by 2012 had sold over a million copies worldwide. It received the National Academies Communication Award and was hailed by The Economist, The Guardian, and The New York Times as one of the best books of the year.

Critics commended it not just for its intellectual rigor, but for making complex psychological theories accessible to general readers. According to The Financial Times, the book is “a tour de force of scholarship and clear writing.”

Academic Response: Widely Cited and Contested

In academia, Kahneman’s work has been transformative. The book synthesizes decades of research he conducted, much of it with Tversky, and reprints their landmark 1974 article, “Judgment under Uncertainty: Heuristics and Biases,” as an appendix.

Scholars in economics, psychology, philosophy, and law have applied his insights to risk assessment, legal judgment, and public policy design. For example, the concept of framing effects is now a foundational idea in behavioral public administration, showing how System 1’s susceptibility to context can influence voting behavior or medical decisions.

However, not all responses have been uncritical.

Criticism: Replication and Real-World Complexity

One major area of criticism stems from the replication crisis in psychology. Some priming experiments Kahneman cited, studies suggesting that subtle cues can unconsciously affect behavior, have failed to replicate in subsequent research. Kahneman acknowledged the problem publicly: in a widely reported 2012 open letter, he urged priming researchers to organize systematic replications of their key findings.

Furthermore, critics like Paul Bloom (in Against Empathy) argue that Kahneman underestimates human rationality, suggesting people are more thoughtful than the book portrays. Bloom writes:

“People are rational because they make thoughtful decisions in their everyday lives… not as stupid as scholars think they are.”

This critique suggests that Thinking Fast and Slow, while revealing the flaws of cognition, may sometimes present an overly bleak picture of human rational capacity.

Cultural and Industry Influence: From Baseball to Wall Street

The book’s influence goes far beyond academia. It has found resonance in unexpected sectors:

- Finance: Portfolio managers and traders use Kahneman’s insights on loss aversion and overconfidence to temper emotional investment decisions.

- Sports: Baseball scouts now leverage concepts like anchoring bias to reduce cognitive distortions in talent evaluation.

- Technology: UX designers and product managers draw from Kahneman’s analysis of cognitive ease to create more intuitive user experiences.

Notably, Nassim Nicholas Taleb, author of The Black Swan, called Thinking Fast and Slow “a landmark book in social thought, in the same league as The Wealth of Nations by Adam Smith and The Interpretation of Dreams by Sigmund Freud.”

Quotations from Thinking Fast and Slow

On the Illusion of Intuition

“We are often confident even when we are wrong, and an objective observer is more likely to detect our errors than we are.”

— Daniel Kahneman, Introduction

A defining line that captures the danger of overconfidence. This quote is often cited in discussions around investment decisions, hiring, and leadership psychology.

On the Halo Effect

“When the handsome and confident speaker bounds onto the stage, you can anticipate that the audience will judge his comments more favorably than he deserves.”

— Daniel Kahneman, Introduction

A striking example of System 1 thinking—intuitive, emotional, and prone to bias. This line is powerful in contexts ranging from advertising to politics.

On the Nature of Expertise

“Intuition is nothing more and nothing less than recognition.”

— Herbert Simon, as quoted by Kahneman

Used to demystify the concept of “gut feeling,” this quote has become a favorite among professionals who emphasize the role of experience in high-stakes decision-making.

On the Role of Heuristics

“People tend to assess the relative importance of issues by the ease with which they are retrieved from memory—and this is largely determined by the extent of coverage in the media.”

— Daniel Kahneman, Introduction

A sharp commentary on the availability heuristic—a crucial theme in discussions around media influence, misinformation, and public policy.

On the Experiencing vs. Remembering Self

“Odd as it may seem, I am my remembering self, and the experiencing self, who does my living, is like a stranger to me.”

— Daniel Kahneman, Part V: Two Selves

One of the most philosophical and hauntingly human insights in the book. This quote is often used in debates about happiness, memory, and the psychology of time.

On Framing and Choice

“Different ways of presenting the same information often evoke different emotions.”

— Daniel Kahneman, Part IV: Choices

Essential in behavioral economics, this quote illustrates the framing effect, relevant to marketing, healthcare, and legal decisions.

On Cognitive Bias and System 1

“System 1 is designed to jump to conclusions from little evidence.”

— Daniel Kahneman, Part I: Two Systems

Concise, clinical, and profoundly accurate—this line sums up the entire foundation of intuitive error.

On Hindsight

“The idea that the future is unpredictable is undermined every day by the ease with which the past is explained.”

— Daniel Kahneman, Part III: Overconfidence

A sobering reflection on hindsight bias—a pervasive error in investing, strategy, and history writing.

These quotes enrich our understanding of how we think, act, and decide—and more importantly, why we so often get it wrong.

Strengths Recap

- Exceptionally clear articulation of complex psychological principles.

- Rich empirical backing from decades of foundational research.

- Wide-ranging application across law, economics, medicine, policy, and everyday life.

- Human, honest, and often humorous tone.

⚠️ Weaknesses Recap

- Some experimental findings, especially in priming, have been challenged during the replication crisis.

- At times repetitive or dense, especially for non-academic readers.

- Lacks a comprehensive toolkit for applying lessons in daily decision-making.

Who Should Read This Book?

✅ Must-Reads for:

- Students and scholars of psychology, economics, law, or policy.

- Investors and executives seeking to improve rationality in decision-making.

- UX designers, marketers, educators, and healthcare professionals interested in behaviorally informed design and communication.

- Anyone seeking to understand how thinking works—and how it often doesn’t.

❌ May Not Suit:

- Readers seeking light, motivational content or quick tips.

- Those uninterested in self-examination or critical introspection.

Final Thought: A Book That Changes How You Think

After reading Thinking Fast and Slow, the world looks different. You will hear a politician speak and sense an anchoring bias. You’ll second-guess your gut when hiring a new employee. You’ll pause before reacting in anger, recognizing your System 1 response. And perhaps most importantly, you’ll begin to doubt—intelligently, and productively.

In Kahneman’s own words:

“The expectation of intelligent gossip is a powerful motive for serious self-criticism.”

That’s the lasting impact of this book: it doesn’t just improve how we think; it reminds us to stop and think at all.

Conclusion and Recommendations

Final Impressions: A Masterpiece of the Mind

Thinking Fast and Slow is not merely a book. It’s an intellectual awakening—an immersive exploration of how our minds truly work, and often how they betray us. Daniel Kahneman has gifted us a framework to decode the mechanisms of judgment, cognitive bias, and decision-making under uncertainty that shape our lives in ways we rarely notice.

He distills decades of research into deeply human insights. The division between System 1 and System 2 becomes more than a model—it becomes a lens through which to understand conversations, conflicts, markets, media, and even our own internal narratives. Kahneman invites readers to turn the mirror inward and recognize that what we “know” is often just what we feel confident about—and that confidence is no guarantee of correctness.

“We are blind to our blindness. We have very little idea of how little we know.”

— Daniel Kahneman

That’s the beauty of this book. It doesn’t just inform—it humbles.